The Walk of Change

Creating an interactive art experience

Timeline

12 Weeks

March - July 2019

Project type

Study project

My role

Technical development lead

Tools

Microsoft Kinect

Processing, Java

Skills

Project Management, Java development, Kinect development

Outcome

Interactive art experience for an exhibition

The Walk of Change is an interactive art experience

01 Overview

In the sixth semester of my studies, we were tasked with designing and implementing an interactive art experience. Our professors gave us two options: an interactive wall or an interactive floor. We chose the second and created an interactive floor that changes as soon as people walk over it. The finished project was shown at the futurological congress exhibition at the Technical University in Ingolstadt.

if ( art != product ) {

HowToStart( person[9], weeks[12] ); }

02 The Challenge

This project was less about building a real product and more about creating an interactive art experience with a “wow factor”. The first thing we had to figure out was how to organize ourselves as a team of 9 people with different work preferences.

Coming up with ideas

Ideation and Research

04 Ideation

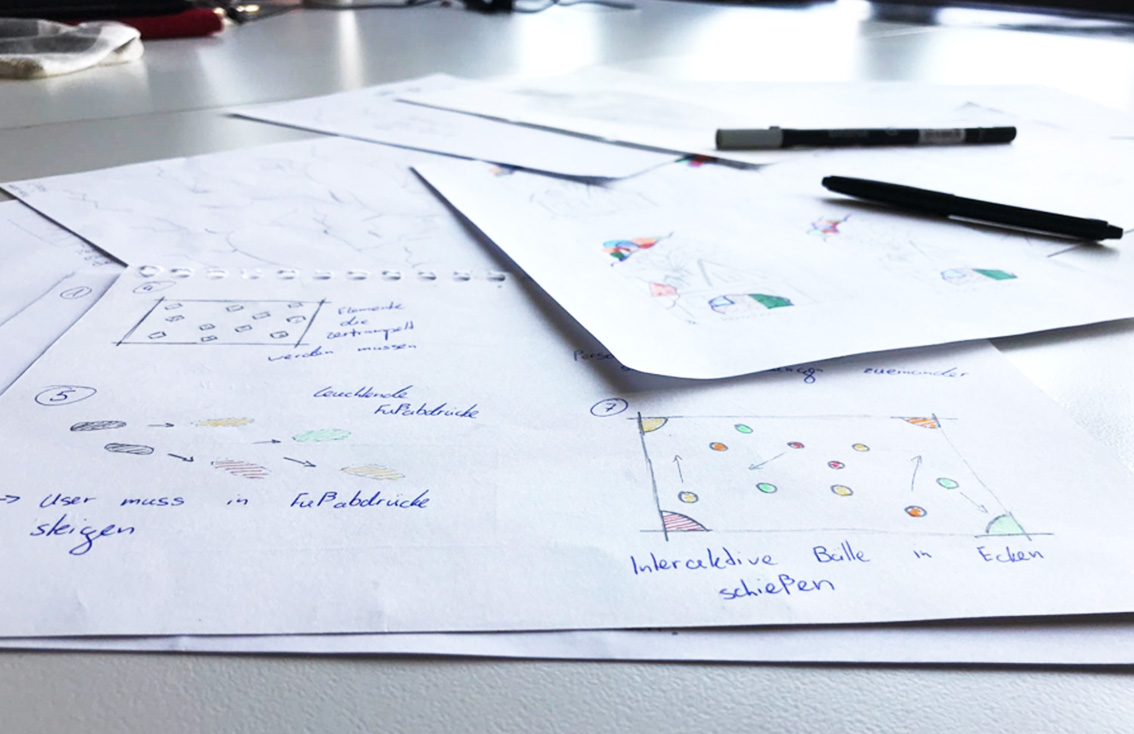

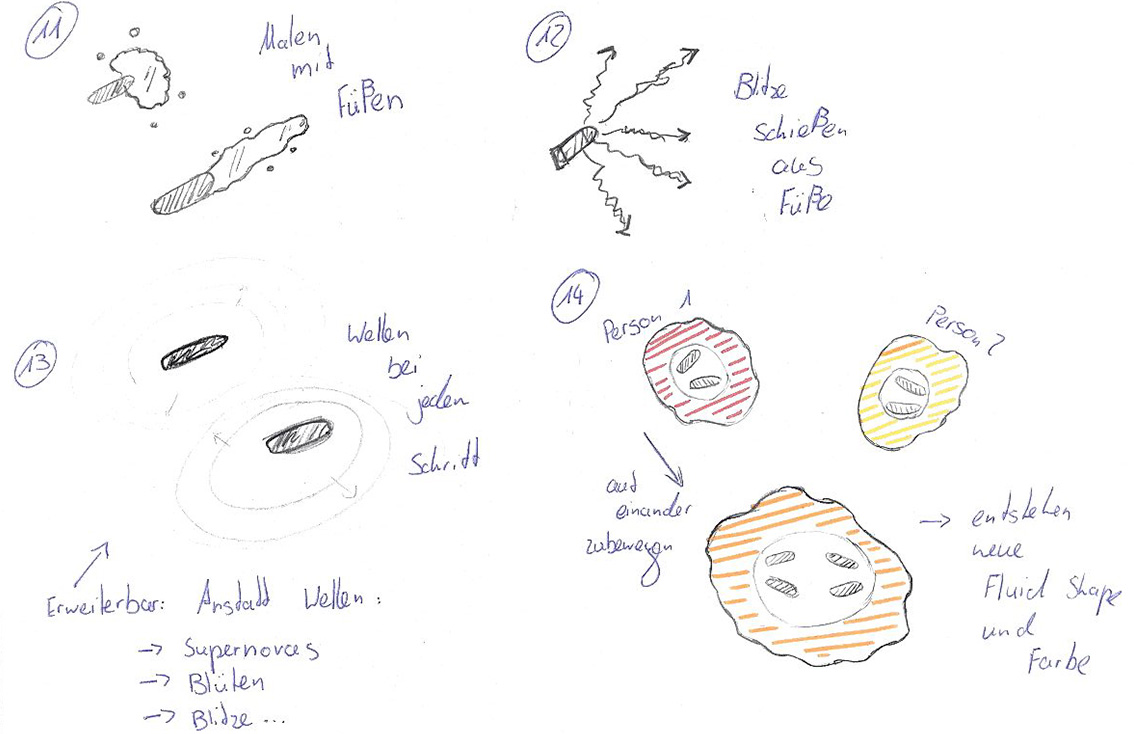

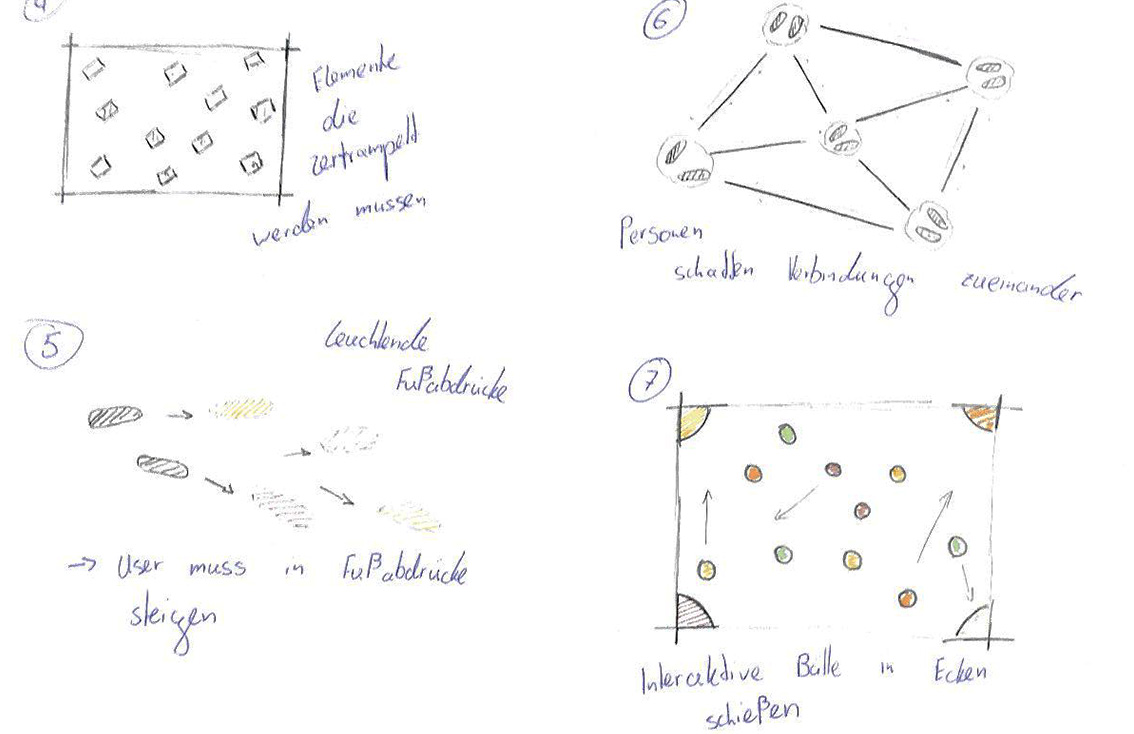

The first step was to develop a concept. Everyone researched interactive experiences they liked, adapted the ideas to our project, sketched them, and presented them to the team. We then voted on the proposals and chose two concepts to develop further.

The technological

side of life

05 Technologies

For the development of the floor, we decided to use a Microsoft Kinect, attached to a wide-angle projector mounted on the ceiling and pointing towards the floor. People walking underneath it would be detected by the Kinect's depth sensor. At first we planned to develop everything in Unity, but we eventually went with Processing because we found multiple tutorials online and everyone in the development team could write Java (whereas Unity uses C#).

Measuring a test floor

Preparing a test projector and Kinect setup

Teamwork

is essential

06 Development

We initially operated as a single team of 9 people, then decided to split into smaller groups and work on the different process phases in parallel. I was part of the development group and the technical lead of the project. Within the group, we split up the development tasks further: two people worked on a prototype of the second concept and one person on a prototype of the first. Since I was already familiar with the Kinect, I worked on a depth-based blob detection program.
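To illustrate what depth-based blob detection means here, the following is a minimal sketch in plain Java, not the project's actual code. It assumes the depth frame is already available as a row-major `int[]` (how the Kinect library delivers it, and in which unit, is left open), and the names `DepthBlobDetector`, `detect`, `near`, `far` and `minPixels` are hypothetical. Pixels whose depth falls between two thresholds are treated as "person", connected pixels are grouped with a flood fill, and each group's centre is reported.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

/** Sketch: find blobs (people) in one depth frame from a ceiling-mounted sensor. */
class DepthBlobDetector {

    /** Centre and pixel count of one detected blob, in depth-image coordinates. */
    static final class Blob {
        final int x, y, size;
        Blob(int x, int y, int size) { this.x = x; this.y = y; this.size = size; }
    }

    /** A pixel counts as "person" if its depth lies between the two thresholds. */
    static boolean inRange(int d, int near, int far) {
        return d >= near && d <= far;
    }

    /**
     * depth: row-major depth values (assumed layout), width * height entries.
     * near/far: thresholds in the same unit as the depth values.
     * minPixels: smaller groups are discarded as sensor noise.
     */
    static List<Blob> detect(int[] depth, int width, int height,
                             int near, int far, int minPixels) {
        boolean[] visited = new boolean[depth.length];
        List<Blob> blobs = new ArrayList<>();
        ArrayDeque<Integer> queue = new ArrayDeque<>();

        for (int start = 0; start < depth.length; start++) {
            if (visited[start] || !inRange(depth[start], near, far)) continue;

            // Flood fill over 4-connected neighbours, summing coordinates.
            long sumX = 0, sumY = 0;
            int count = 0;
            visited[start] = true;
            queue.add(start);
            while (!queue.isEmpty()) {
                int i = queue.poll();
                int x = i % width, y = i / width;
                sumX += x; sumY += y; count++;
                int[] neighbours = {
                    x > 0 ? i - 1 : -1,
                    x < width - 1 ? i + 1 : -1,
                    y > 0 ? i - width : -1,
                    y < height - 1 ? i + width : -1
                };
                for (int n : neighbours) {
                    if (n >= 0 && !visited[n] && inRange(depth[n], near, far)) {
                        visited[n] = true;
                        queue.add(n);
                    }
                }
            }
            if (count >= minPixels) {
                blobs.add(new Blob((int) (sumX / count), (int) (sumY / count), count));
            }
        }
        return blobs;
    }

    public static void main(String[] args) {
        int w = 6, h = 4;
        int[] depth = new int[w * h];
        java.util.Arrays.fill(depth, 3000);          // floor, far from the sensor
        depth[1 * w + 1] = depth[1 * w + 2] = 1500;  // a "person", closer to the sensor
        depth[2 * w + 1] = depth[2 * w + 2] = 1500;
        for (Blob b : detect(depth, w, h, 500, 2500, 3)) {
            System.out.println("blob at " + b.x + "," + b.y + " size " + b.size);
        }
    }
}
```

In a Processing sketch, something like `detect` would run once per frame, which is why the detection constantly produces fresh coordinates.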

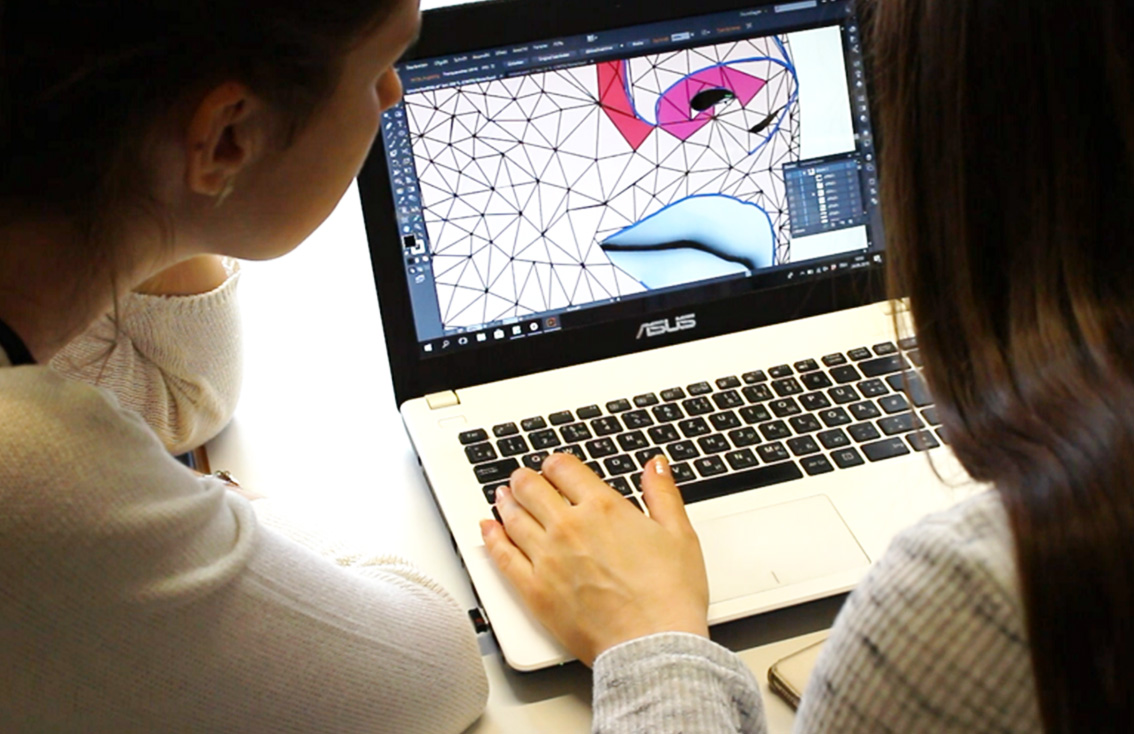

The design team creating the visual floor design

The development team creating applications

The "Failure"

Concept one

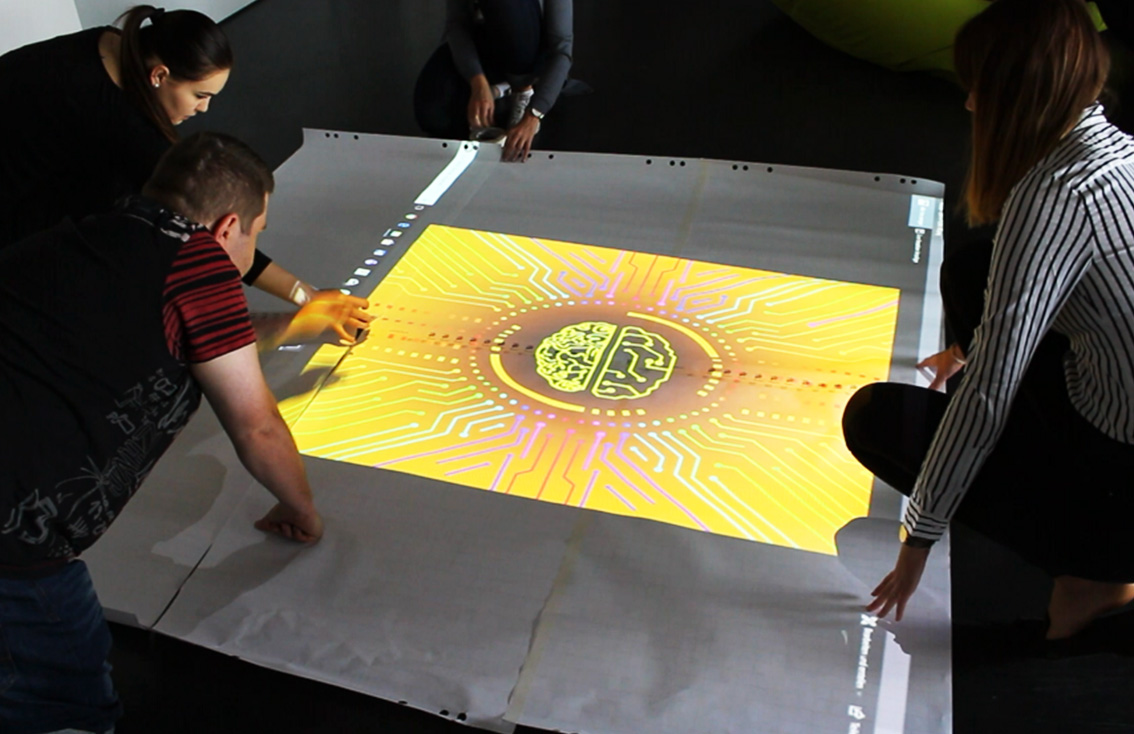

The first concept was an animation that starts at the point where a person is standing or walking and evolves towards the middle of the floor. For this, the design team created an animation of a futuristic brain that receives a data stream from the spot where the person is standing. It looked really awesome.

Visual design concept one

Why it didn't work out

some mismanagement

It was mostly my fault. I assumed we could simply merge our separate pieces of code at the end and it would work out. Unfortunately, for concept one it didn't. I planned far too little time for the final merge and for the troubleshooting it might require. In the end, we had to abort the first concept and reuse the design for the second concept.

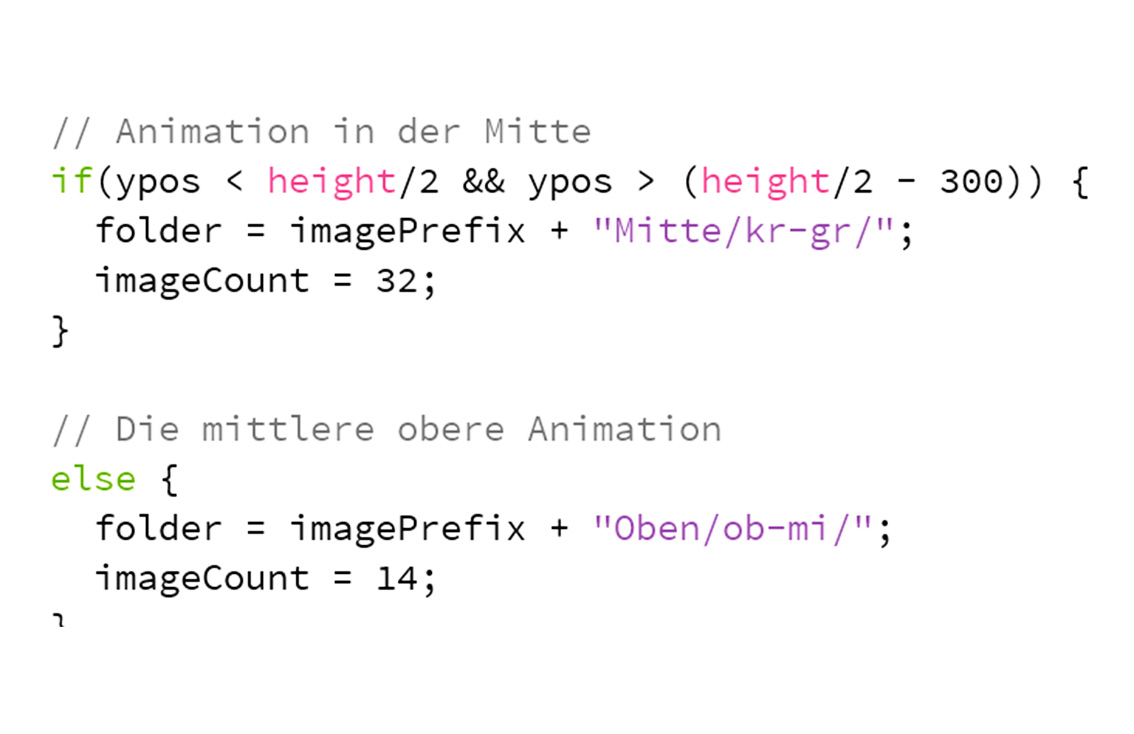

Code snippet concept one - checking click position and preparing animation

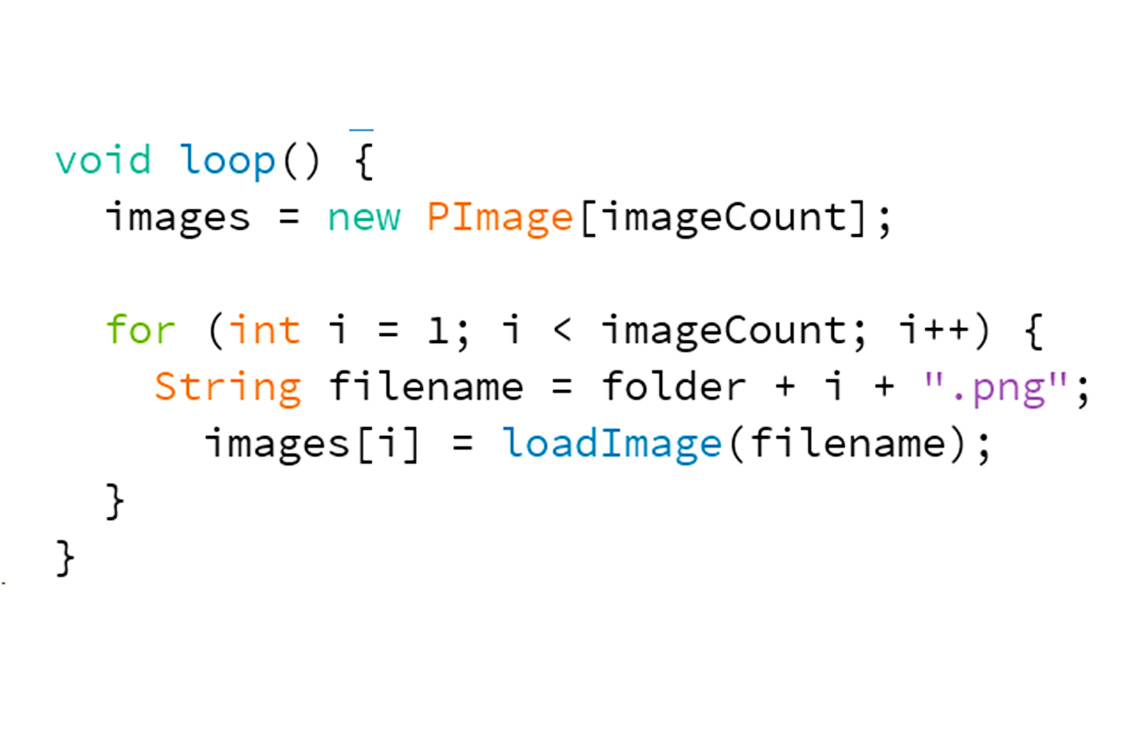

Code snippet concept one - creating image array, searching folder and looping images

The problem

communication

The prototype of the first concept was built around single mouse-click events. It took the x and y coordinates of the click and started a PNG sequence as an animation from that point. While the sequence was playing, it couldn't handle any other event without the animation stopping immediately. My Kinect blob detection, on the other hand, was constantly sending the x and y coordinates of every person detected in the scene. The prototype was simply not built for that, and it was already too late in the process to rewrite everything.
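The mismatch can be made concrete with a small sketch. This is not what we built. It shows one possible way a frame-sequence player could tolerate a continuous stream of positions by ignoring new triggers while a sequence is still running. The class `SequencePlayer` and its methods are hypothetical names chosen for illustration.

```java
/** Sketch: a frame-sequence player that accepts a continuous stream of triggers. */
class SequencePlayer {
    private final int frameCount;   // number of images in the PNG sequence
    private int currentFrame = -1;  // -1 means idle
    private int originX, originY;   // where the running animation started

    SequencePlayer(int frameCount) {
        this.frameCount = frameCount;
    }

    boolean isPlaying() {
        return currentFrame >= 0;
    }

    /**
     * Called for every detected position, possibly many times per second.
     * Starts a new run only when idle; returns true if a run was started.
     */
    boolean trigger(int x, int y) {
        if (isPlaying()) return false;  // keep the running animation untouched
        originX = x;
        originY = y;
        currentFrame = 0;
        return true;
    }

    /** Call once per draw cycle. Returns the frame index to draw, or -1 if idle. */
    int nextFrame() {
        if (!isPlaying()) return -1;
        int frame = currentFrame++;
        if (currentFrame >= frameCount) currentFrame = -1;  // sequence finished
        return frame;
    }

    int originX() { return originX; }
    int originY() { return originY; }

    public static void main(String[] args) {
        SequencePlayer player = new SequencePlayer(3);
        // Simulate a sensor that reports a position on every draw cycle.
        for (int cycle = 0; cycle < 5; cycle++) {
            boolean started = player.trigger(100 + cycle, 200);
            System.out.println("cycle " + cycle + " started=" + started
                    + " frame=" + player.nextFrame());
        }
    }
}
```

A single-click prototype effectively restarts or interrupts its sequence on each event, whereas a guard like `trigger` lets the stream keep arriving without disturbing the animation in progress.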

Concept one prototype - single click event animation

Kinect blob detection prototype - constantly detecting my hands as blobs

The success

Concept two

The second concept was a black-and-white image that gets colorized as soon as a person walks over it. Again, the design team did a great job. Based on origami design, they created a robot head in various shades of blue, which looked great when colorized.

Visual design concept two

Why it worked out

Teamwork and good karma

The other guys did a fantastic job programming the prototype. It was based on iterating over all the pixels and checking whether the cursor was hovering over them. This could easily be combined with my blob detection: if a blob, i.e. a person, was detected by the Kinect, the program took its coordinates and colorized the image in an 80px square around it. Through a coroutine, we could then decolorize the square again after a certain time and achieve an endless interactive experience.
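The colorize-and-fade idea can be sketched roughly as follows. This is an illustration in plain Java rather than the original Processing code: instead of a coroutine it stores a per-pixel expiry timestamp, which is a swapped-in technique. The 80px square comes from the description above. The class `ColorReveal`, the hold time `holdMs` and the grayscale weights are assumptions.

```java
/** Sketch: show a gray image and reveal colour in a square around each detected position. */
class ColorReveal {
    static final int SQUARE = 80;         // side length of the revealed square in pixels

    private final int width, height;
    private final int[] color;            // full-colour ARGB pixels, row-major
    private final int[] gray;             // the same image converted to gray
    private final long[] revealedUntil;   // per-pixel expiry time in milliseconds
    private final long holdMs;            // how long a square stays colourful (assumed)

    ColorReveal(int[] colorPixels, int width, int height, long holdMs) {
        this.width = width;
        this.height = height;
        this.holdMs = holdMs;
        this.color = colorPixels.clone();
        this.gray = new int[colorPixels.length];
        this.revealedUntil = new long[colorPixels.length];
        for (int i = 0; i < colorPixels.length; i++) gray[i] = toGray(colorPixels[i]);
    }

    /** Weighted luminance conversion; the weights are a common choice, not the project's. */
    static int toGray(int argb) {
        int r = (argb >> 16) & 0xFF, g = (argb >> 8) & 0xFF, b = argb & 0xFF;
        int lum = (r * 299 + g * 587 + b * 114) / 1000;
        return (argb & 0xFF000000) | (lum << 16) | (lum << 8) | lum;
    }

    /** Mark the square around (cx, cy) as colourful until nowMs + holdMs. */
    void reveal(int cx, int cy, long nowMs) {
        int half = SQUARE / 2;
        for (int y = Math.max(0, cy - half); y < Math.min(height, cy + half); y++) {
            for (int x = Math.max(0, cx - half); x < Math.min(width, cx + half); x++) {
                revealedUntil[y * width + x] = nowMs + holdMs;
            }
        }
    }

    /** Build the frame to draw: colour where the reveal is still active, gray elsewhere. */
    int[] render(long nowMs) {
        int[] out = new int[color.length];
        for (int i = 0; i < out.length; i++) {
            out[i] = revealedUntil[i] > nowMs ? color[i] : gray[i];
        }
        return out;
    }

    public static void main(String[] args) {
        int w = 200, h = 200;
        int[] image = new int[w * h];
        java.util.Arrays.fill(image, 0xFF0000FF);     // solid blue test image
        ColorReveal floor = new ColorReveal(image, w, h, 1000);
        floor.reveal(100, 100, 0);                    // a person detected at (100, 100)
        int[] frame = floor.render(500);
        System.out.println(Integer.toHexString(frame[100 * w + 100]));
    }
}
```

Calling `reveal` for every blob position on every frame keeps the square under a standing person colourful, and it fades back to gray once the person has moved on and the hold time has passed.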

Concept two prototype - Colorize on hover

Concept two prototype combined with Kinect detection

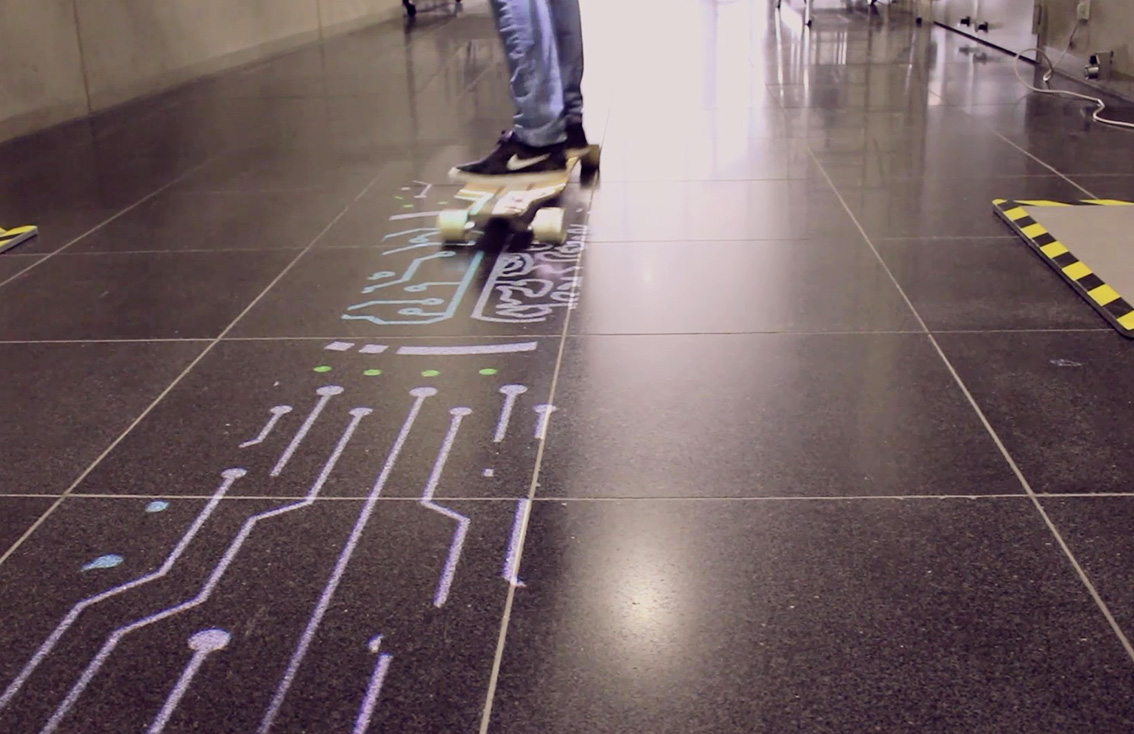

The Installation

Helping each other

06 Installation

For the installation of the interactive floor, we got help from the university as well as the city theatre. We received a wide-angle projector and a scaffold to mount it on. The Kinect was attached to the projector, and a stereo system was installed. Everything turned out really well.

Making the last refinements

Setting up the final installation

Great project with a great team

Conclusion

07 Conclusion

Overall, this was a really great project. The visitors at the exhibition were amazed by our floor, and we received a lot of positive feedback. Shoutout to all my teammates, as well as my professors Ingrid Stahl, Georg Passig and Stefan Schäfer. It was a pleasure working together with you!

What I've learned

management and communication

08 Lessons learned

Of course, it was hard to abort the first concept, but looking back, it taught me that all teammates should stay in touch regularly. As a project lead, you should keep everybody in the loop and use weekly or daily meetings to synchronize what everybody is working on. Integrating the results regularly, as an agile management approach encourages, would have helped identify flaws and errors at an early stage and could have rescued concept one.
